Billed as the premier conference for Augmented Reality (AR), Mixed Reality (MR) and Virtual Reality (VR), IEEE ISMAR was the perfect location for ATLAS community members to showcase their work this month.

ATLAS PhD students Rishi Vanukuru, Torin Hopkins and Suibi Che-Chuan Weng attended ISMAR 2023 in Sydney, Australia, from October 16-20, along with leading researchers in academia and industry.

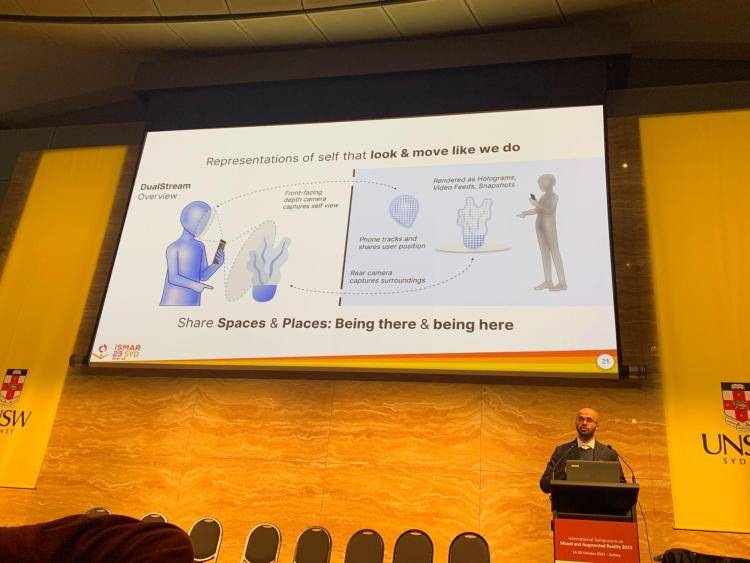

Vanukuru presented his work on DualStream, a system for mobile phone-based spatial communication employing AR to give people more immersive tools to “share spaces and places.” He also participated in the “1st Joint Workshop on Cross Reality” with his research on using mobile devices to support collaboration.

Meanwhile, Hopkins and Weng displayed their respective research on improving ways for musicians to collaborate remotely.

Research ATLAS PhD students presented at ISMAR 2023

DualStream: Spatially Sharing Selves and Surroundings using Mobile Devices and Augmented Reality

Rishi Vanukuru, Suibi Che-Chuan Weng, Krithik Ranjan, Torin Hopkins, Amy Banić, Mark D. Gross, Ellen Yi-Luen Do

Abstract: In-person human interaction relies on our spatial perception of each other and our surroundings. Current remote communication tools partially address each of these aspects. Video calls convey real user representations but without spatial interactions. Augmented and Virtual Reality (AR/VR) experiences are immersive and spatial but often use virtual environments and characters instead of real-life representations. Bridging these gaps, we introduce DualStream, a system for synchronous mobile AR remote communication that captures, streams, and displays spatial representations of users and their surroundings. DualStream supports transitions between user and environment representations with different levels of visuospatial fidelity, as well as the creation of persistent shared spaces using environment snapshots. We demonstrate how DualStream can enable spatial communication in real-world contexts, and support the creation of blended spaces for collaboration. A formative evaluation of DualStream revealed that users valued the ability to interact spatially and move between representations, and could see DualStream fitting into their own remote communication practices in the near future. Drawing from these findings, we discuss new opportunities for designing more widely accessible spatial communication tools, centered around the mobile phone.

Exploring the use of Mobile Devices as a Bridge for Cross-Reality Collaboration [Workshop Paper]

Rishi Vanukuru, Ellen Yi-Luen Do

Abstract: Augmented and Virtual Reality technologies enable powerful forms of spatial interaction with a wide range of digital information. While AR and VR headsets are more affordable today than they have ever been, their interfaces are relatively unfamiliar, and a large majority of people around the world do not yet have access to such devices. Inspired by contemporary research towards cross-reality systems that support interactions between mobile and head-mounted devices, we have been exploring the potential of mobile devices to bridge the gap between spatial collaboration and wider availability. In this paper, we outline the development of a cross-reality collaborative experience centered around mobile phones. Nearly fifty users interacted with the experience over a series of research demo days in our lab. We use the initial insights gained from these demonstrations to discuss potential research directions for bringing spatial computing and cross-reality collaboration to wider audiences in the near future.

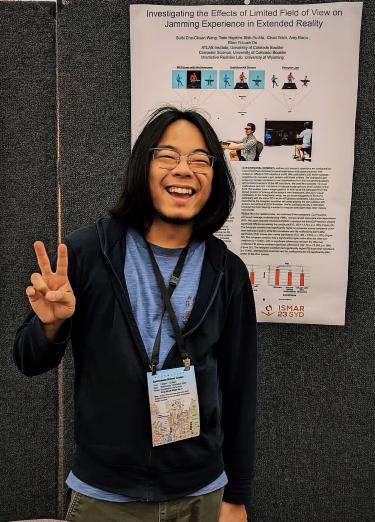

Investigating the Effects of Limited Field of View on Jamming Experience in Extended Reality [Poster Paper]

Suibi Che-Chuan Weng, Torin Hopkins, Shih-Yu Ma, Chad Tobin, Amy Banić, Ellen Yi-Luen Do

Abstract: During musical collaboration, extra-musical visual cues are vital for communication between musicians. Extended Reality (XR) applications that support musical collaboration are often used with head-mounted displays such as Augmented Reality (AR) glasses, which limit the field of view (FOV) of the players. We conducted a three-part study to investigate the effects of limited FOV on co-presence. To investigate this issue further, we conducted a within-subjects user study (n=19) comparing an unrestricted FOV holographic setup to Nreal AR glasses with a 52° limited FOV. In the AR setup, we tested two conditions: 1) standard AR experience with 52°-limited FOV, and 2) a modified AR experience, inspired by player feedback. Results showed that the holographic setup offered higher co-presence with avatars.

Networking AI-Driven Virtual Musicians in Extended Reality [Poster]

Torin Hopkins, Rishi Vanukuru, Suibi Che-Chuan Weng, Chad Tobin, Amy Banić, Mark D. Gross, Ellen Yi-Luen Do

Abstract: Music technology has embraced Artificial Intelligence as part of its evolution. This work investigates a new facet of this relationship, examining AI-driven virtual musicians in networked music experiences. Responding to an increased popularity due to the COVID-19 pandemic, networked music enables musicians to meet virtually, unhindered by many geographical restrictions. This work begins to extend existing research that has focused on networked human-human interaction by exploring AI-driven virtual musicians’ integration into online jam sessions. Preliminary feedback from a public demonstration of the system suggests that despite varied understanding levels and potential distractions, participants generally felt their partner’s presence, were task-oriented, and enjoyed the experience. This pilot aims to open opportunities for improving networked musical experiences with virtual AI-driven musicians and informs directions for future studies with the system.