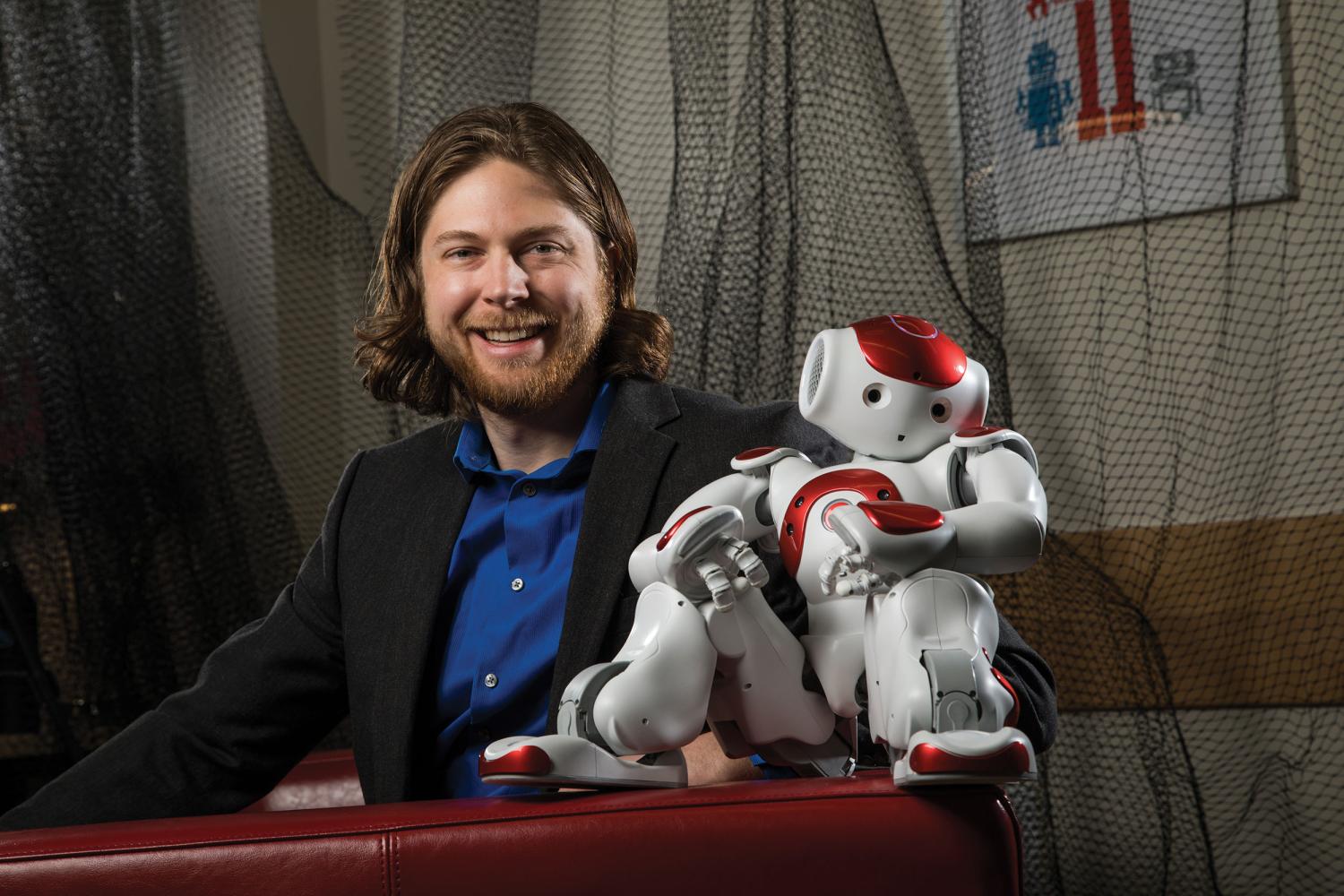

The Robot Whisperer

Robots could someday be found making beds at understaffed nursing homes, helping homeowners with DIY projects or handling mundane chores on the International Space Station.

But for that day to arrive, they have to become better communicators, says Dan Szafir, a professor in CU Boulder’s Department of Computer Science.

“As people, we are coded to use nonverbal cues and we are very good at untangling what they mean,” Szafir says. If you’re working on a car with a friend, you might say “Grab that wrench” while glancing at the one you want. But if you were working with a robot, that glance would be lost on it, and you’d have to spell it out verbally.

Szafir videotapes human co-workers and documents their facial expressions, gestures and changes in tone of voice. He’ll use the data to develop computer models for programming more intuitive robots. He’s also exploring ways to design robots that move less erratically and are cognizant of personal space.

“They can be loud, robotic-looking and hard to predict,” he says. “People find that unsettling.”

Principal Investigator: Dan Szafir

Funding: National Aeronautics and Space Administration (NASA); National Science Foundation (NSF); Intel

Collaboration/Support: Computer Science; ATLAS Institute